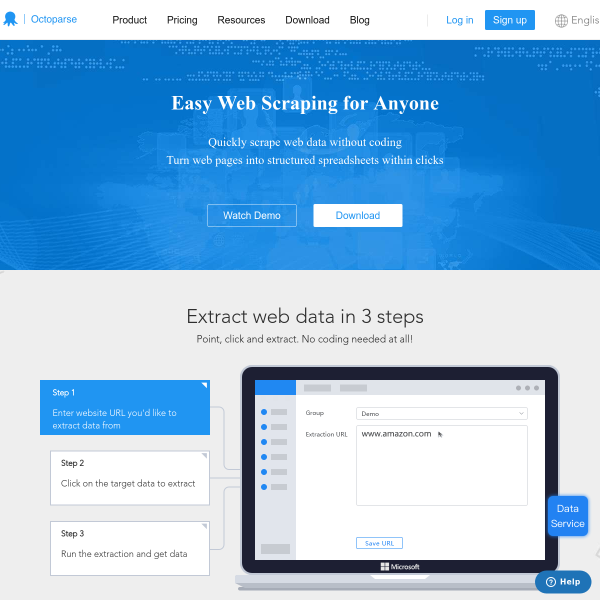

Advanced Mode is a highly flexible and powerful web scraping mode. For people who want to scrape websites with complex structures, we strongly recommend starting your data extraction project with Advanced Mode. With Advanced Mode, you can:

- achieve data scraping on almost all kinds of web pages;
- extract data such as text, URLs, images, and HTML;
- design a workflow to interact with the web page, such as login authentication, keyword searching, and opening a drop-down menu;
- customize your workflow, such as setting up a wait time, modifying XPath, and reformatting the extracted data.

If the website you are going to scrape is very simple, you can begin your first data hunting trip with Wizard Mode.

In this tutorial, we will guide you through the 3 main steps of creating a task with Advanced Mode and cover the unique features of Advanced Mode:

1) Enter the target URL and click "Save URL"
2) Interact with the web page in the built-in browser
3) Run the task to get the data extracted

After clicking "Save URL", you enter the task configuration interface. The most critical part of a task is the workflow built for your specific data extraction requirements: Octoparse executes every action configured in the workflow to complete your data collection.

Under Advanced Mode, the task configuration interface can be switched between two modes: the Select Mode and the Workflow Mode. By default, Octoparse opens the task in Select Mode. Use the on/off toggle in the upper right corner to turn on the Workflow Mode. With the Workflow Mode on, you get a clearer picture of what your task is doing and are less likely to mix up the steps. Now, let's start building the workflow together.

Interact with the web page in the built-in browser - to capture any web data with simple clicks

While building a new task, you will usually begin by selecting the data on the web page that you want Octoparse to scrape. Under Advanced Mode, when you interact with the web page in the built-in browser, Octoparse responds by offering notices and available actions in Action Tips.

You can use this scraper to get information about the popularity of certain websites. You can then use that information for market research, to track potential competitors, or for other research. The actor only needs to know which websites to get information from and which proxies to use. You can specify multiple websites to get multiple results in one run.

You need to use proxies when scraping from the Apify platform. You can use either Apify Proxy or provide your own custom proxies; any type of proxy (datacenter, residential, or custom) should be OK.

In terms of platform usage credits, scraping 20 websites takes about 0.02 compute units, depending on the proxy and the number of retries.

The actor stores its results in the default dataset associated with the actor run. From there, they can be exported to various formats, such as JSON, XML, CSV, or Excel.
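The export step mentioned above can also be done outside the Apify console. The following is a minimal sketch that assumes you download results through the Apify API (v2) rather than the web interface. It builds a download URL for the default dataset of an actor run, using the `/actor-runs/{runId}/dataset/items` endpoint and its `format` query parameter. The run ID and token are hypothetical placeholders, and the helper name `run_dataset_export_url` is made up for illustration. Check the Apify API reference before relying on the exact parameter values.

```python
from urllib.parse import urlencode

# Base URL of the Apify API, version 2.
API_BASE = "https://api.apify.com/v2"

# Map the export formats named in the text to the `format` values
# assumed to be accepted by the dataset-items endpoint ("Excel" -> "xlsx").
EXPORT_FORMATS = {
    "JSON": "json",
    "XML": "xml",
    "CSV": "csv",
    "Excel": "xlsx",
}


def run_dataset_export_url(run_id: str, export_format: str, token: str) -> str:
    """Build a URL that downloads the default dataset of one actor run.

    `run_id` and `token` are placeholders: replace them with your own
    run ID and Apify API token. This helper is hypothetical.
    """
    if export_format not in EXPORT_FORMATS:
        raise ValueError(f"unsupported format: {export_format!r}")
    # urlencode keeps insertion order, so `format` comes before `token`.
    query = urlencode({"format": EXPORT_FORMATS[export_format], "token": token})
    return f"{API_BASE}/actor-runs/{run_id}/dataset/items?{query}"


if __name__ == "__main__":
    # Hypothetical run ID and token, for illustration only.
    print(run_dataset_export_url("MY_RUN_ID", "CSV", "MY_API_TOKEN"))
```

Running the script prints `https://api.apify.com/v2/actor-runs/MY_RUN_ID/dataset/items?format=csv&token=MY_API_TOKEN`. Putting the token in the query string keeps the sketch short. Sending it in an `Authorization` header instead keeps it out of URLs and server logs.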
